Why Automated Accessibility Testing Isn't Enough

Understanding all the human squishiness that goes into making digital products inclusive

In a world of constantly emerging and changing technologies, it is (and has been) important to ensure digital products are accessible. According to the United States Census Bureau, 25% of US adults have a diagnosed and disclosed disability. Adhering to accessibility standards is not only the law; ultimately, good accessibility benefits everyone.

It’s easy to default to automated tools. Don’t get me wrong, they do come in handy in a pinch, and this isn’t a rant about how automated accessibility tools are awful and how you should never use them. Still, there are many reasons (not limited to the ones below) why we should take the additional time and money to slow our roll and manually assess the digital landscape we’re constructing.

Automation can be inconsistent and sometimes lead to false positives

Built-in browser tools are convenient, but they don’t always give you the correct answers. Infamously, Lighthouse has given 100% scores to inaccessible websites.

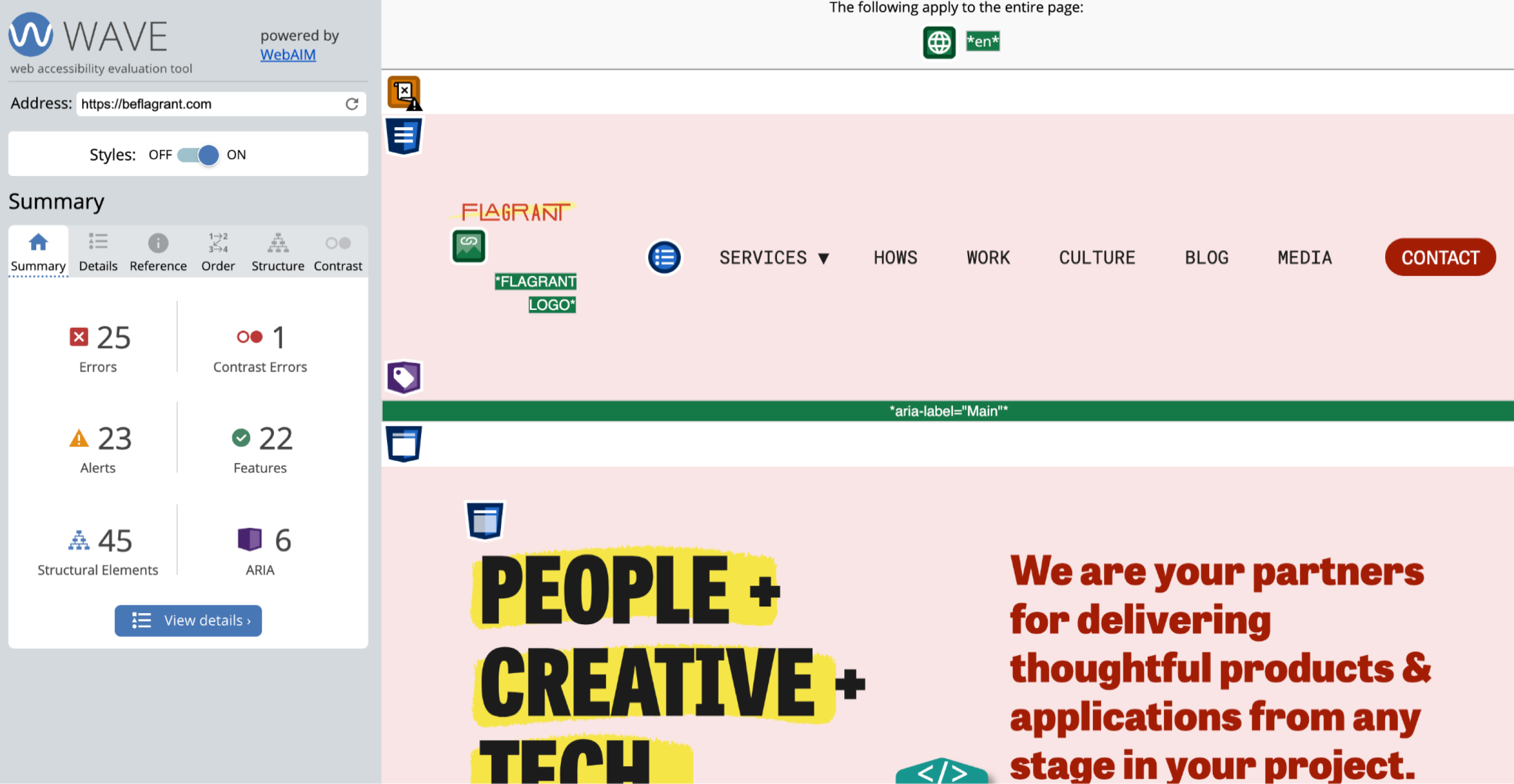

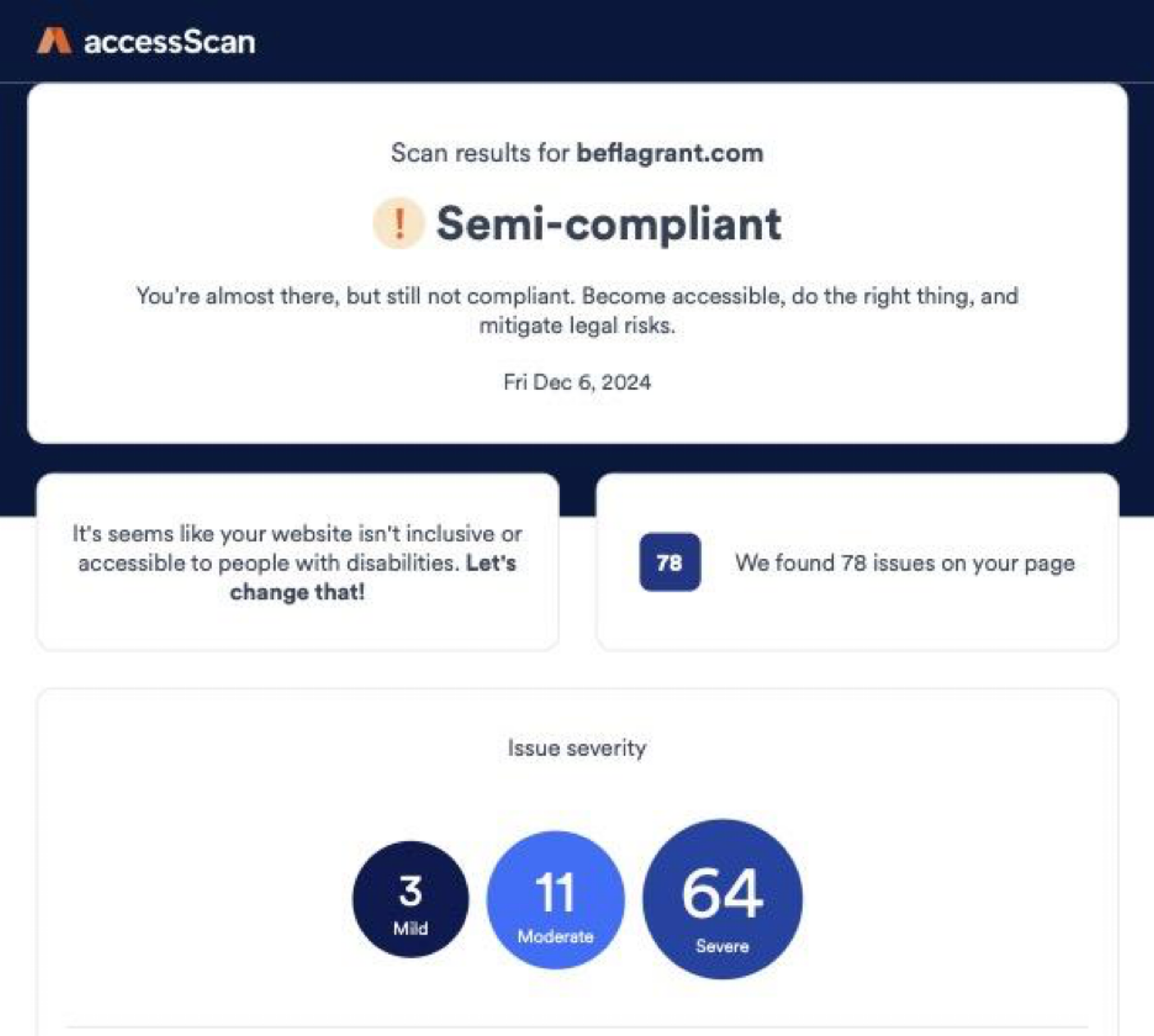

Look at these reports from three different automated accessibility checkers: WAVE, accessScan, and Lighthouse. I’ve used these checkers to test the Flagrant website and each has disparate results ranging from a couple of errors to 78 errors. The validity and thoroughness of an automated accessibility report essentially hinges on which tool(s) you use to assess your digital products.

![Lighthouse report of the Flagrant homepage says the page has an accessibility score of 90 and that "These checks highlight opportunities to improve the accessibility of your web app. Automatic detection can only detect a subset of issues and does not guarantee the accessibility of your web app, so manual testing is also encouraged." Below, the report shows different sections of accessibility errors including "Names and labels: Image elements do not have [alt] attributes. These are opportunities to improve the semantics of the controls in your application. This may enhance the experience for users of assistive technology, like a screen reader" and "Contrast: Background and foreground colors do not have a sufficient contrast ratio. These are opportunities to improve the legibility of the content."](/images/uploads/whyaccessibilitytestingisntenough-3.png)

Can’t dive into nuanced or complex designs and interactions

Automated tools are great for metrics-driven testing such as color contrast and alt text. Where they fall short is when you start peeling that onion into the criss-crossy messiness that is digital products.
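To make "metrics-driven" concrete, here is a minimal sketch of the kind of color contrast check a tool can run. It assumes the WCAG 2.x definitions of relative luminance and contrast ratio, plus the 4.5:1 AA threshold for normal-size text. The function names are mine, for illustration only, and not any particular tool's API.

```python
def relative_luminance(rgb):
    """Relative luminance of an sRGB color given as (r, g, b), each 0-255."""
    def linearize(channel):
        c = channel / 255
        # Piecewise sRGB-to-linear conversion from the WCAG 2.x definition.
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (identical) up to about 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background):
    """Assumed threshold: 4.5:1, the AA minimum for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5


# Mid-gray text on a white background.
print(passes_aa_normal_text((118, 118, 118), (255, 255, 255)))
```

This is a number in, a verdict out. It says nothing about whether that gray text sits on top of a busy photo, or whether a hover state three clicks deep ever gets measured at all.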

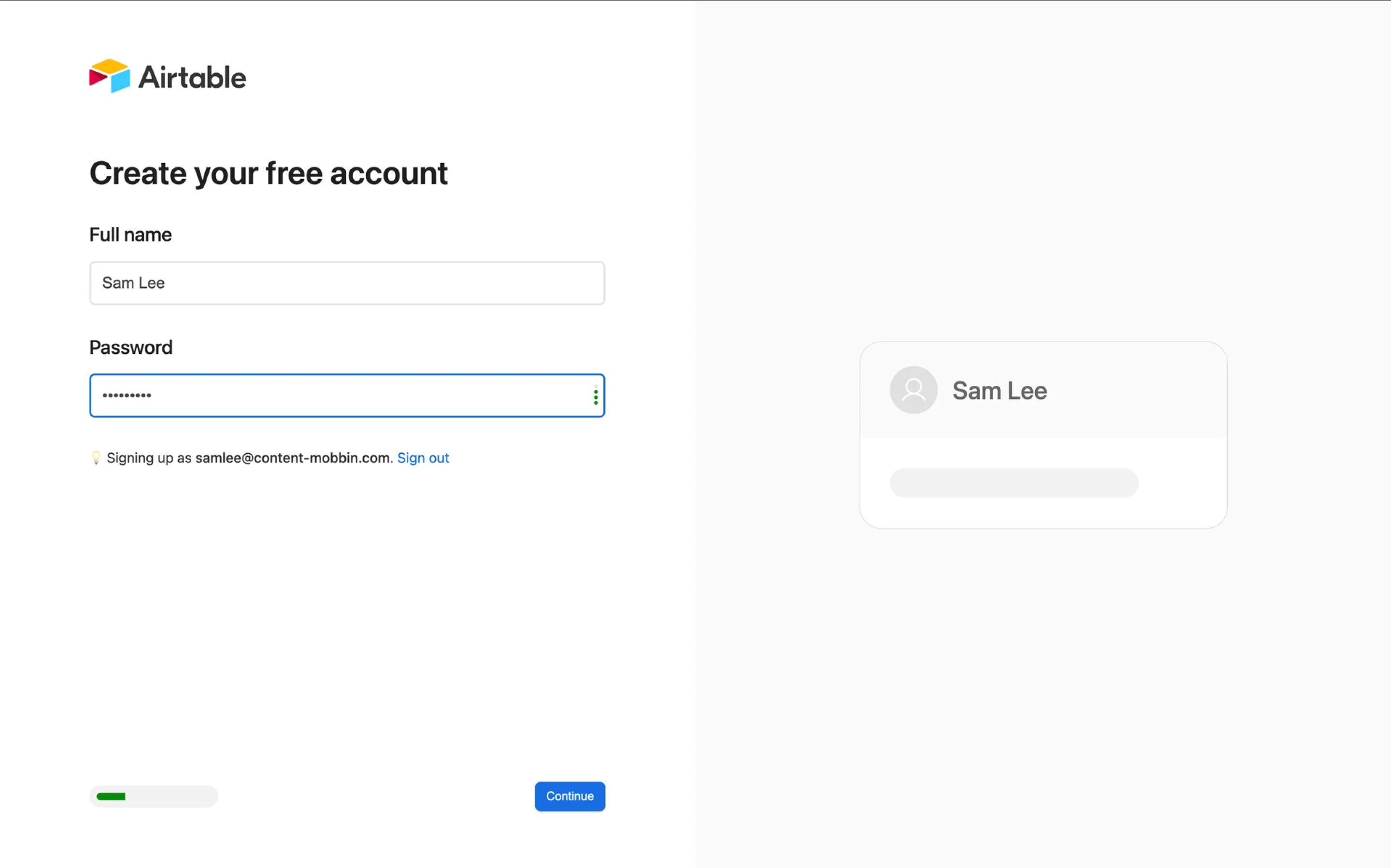

Let’s take a look at some screens. I’m going to use Airtable as an example (no affiliation, I simply stan really nicely designed tables).

Looking first at the sign-up page: it’s simple, just a handful of text fields and other visual elements. I’m sure an automated tool could check whether states like focus and hover exist, whether the text is big enough, and whether the color contrast is sufficient.

Automated testing works in a binary fashion. You either meet the criteria or miss the mark completely. Pass or fail. What automated testing can’t tell you is whether the alt text for the image on the right is clear and informative, or whether the text fields have proper error and focus states.
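Here is a rough sketch of what that pass-or-fail logic can look like for alt text, using only Python's standard `html.parser` module. The class name, function name, and sample markup are hypothetical and purely for illustration; real checkers are more thorough, but the binary shape is similar.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            # Binary check: the attribute is either present or it is not.
            if "alt" not in attr_map:
                self.missing.append(attr_map.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that fail the presence check."""
    checker = MissingAltChecker()
    checker.feed(html)
    return checker.missing


# Hypothetical markup: the first image "passes" with a useless description.
sample = '<img src="hero.png" alt="image"><img src="team.jpg">'
print(images_missing_alt(sample))  # prints ['team.jpg']
```

Notice that `hero.png` sails through with `alt="image"`. The check can confirm an attribute exists; it takes a person to judge whether the description is any good.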

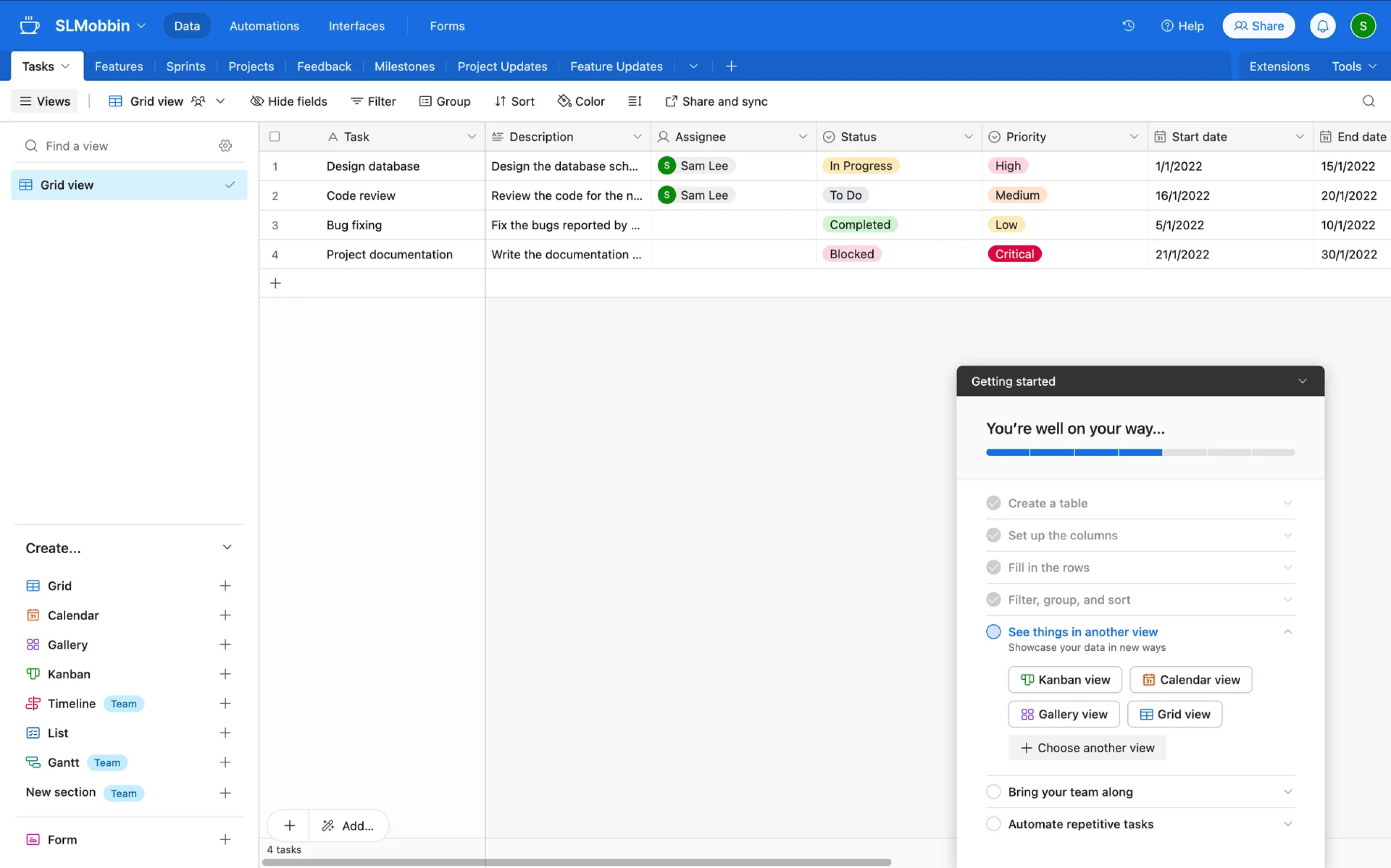

Diving into a more complex screen: Airtable tables. I imagine hours of human work were poured into making the experience as delightful as possible. Automation won’t be sufficient, though, in checking all the different states this screen encompasses. Are the hovers for each separate tab high enough contrast? Will it be able to distinguish the interactions between the main product and in-product onboarding? Can it determine whether the reading order of this page makes logical sense to a user?

Bias is hardwired in

We’ve probably all seen the stories of software alienating specific user groups, from biometric identification that can’t tell people of color apart to voice recognition that has trouble distinguishing between dialects.

While bias lives embedded in our minds and society, people have this nifty capacity for self-awareness and for grasping intersectional nuances that technology does not (and, in my opinion, hopefully never will). People can learn and unlearn about those around them and their environment, and keep taking part in understanding and dismantling bias in our ever-growing digital world.

There are humans on the other side

Will having a human testing your software ensure that it’s 100% accessible for 100% of your users? Absolutely not. I myself have used automated accessibility testing tools to help me assess the products I’m working on.

But automated accessibility testing is what it is: just a tool.

What’s important to remember is that there are actual people using your products, and it’s people who are best suited to understand the complexities of being people. People won’t always get it right on the first try or even the thousandth, but that’s not the point. Creating software products is an iterative process of discovering mistakes, experimenting with new methods, and connecting with others.

Need accessibility testing?

Need accessibility auditing for your digital products and interested in working with Flagrant? Check out our quick wins page for more details or contact us at +1.844.4FLAGRANT / rave@beflagrant.com.


Published on March 19, 2025